Our Kubernetes Stack

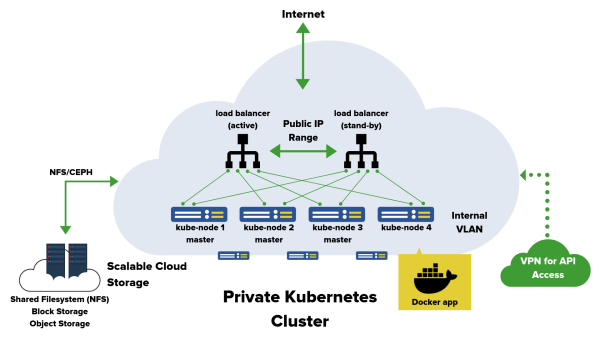

The service consists of HA load balancers and n Kubernetes nodes in a cluster. The customer receives a configuration file with a client certificate for the Kube API, which is accessible via VPN (OpenVPN is used).
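For orientation, a kubeconfig that authenticates with a client certificate generally has the shape sketched below. This is only an illustration: the server address, the file names and the cluster, user and context names are all hypothetical placeholders, and the file you actually receive may be laid out differently (for example with the certificates embedded inline).

```yaml
# Illustrative kubeconfig using client-certificate authentication.
# All names, paths and the server address are placeholders (assumptions),
# not the values delivered with the service.
apiVersion: v1
kind: Config
clusters:
  - name: example-cluster
    cluster:
      # Internal API address, reachable only while the VPN is connected
      server: https://10.0.0.10:6443
      certificate-authority: ca.crt
users:
  - name: example-user
    user:
      client-certificate: client.crt
      client-key: client.key
contexts:
  - name: example-context
    context:
      cluster: example-cluster
      user: example-user
current-context: example-context
```

With the VPN connected, a command such as `kubectl --kubeconfig ./kubeconfig.yaml get nodes` should list the cluster nodes (the file name here is also a placeholder).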

The network stack is managed by Weave, which Kubernetes configures automatically. Only the load balancers have public IP addresses; the other servers are reachable only on an internal network. The load balancers share an HA IP address that is balanced between the two of them, and this is the address you should point your DNS records to.

If you require persistent storage, we provide NFS. You can use nfs-client-provisioner, or S3 storage accessible via the S3 API.
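As a rough sketch of how an application might request NFS-backed storage through nfs-client-provisioner, a PersistentVolumeClaim could look like the following. The claim name, the requested size and the storage class name `nfs-client` are assumptions made for illustration; check the class that actually exists in your cluster with `kubectl get storageclass`.

```yaml
# Illustrative PersistentVolumeClaim for dynamically provisioned NFS storage.
# The storage class name is an assumption; use the one defined in your cluster.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data            # hypothetical claim name
spec:
  accessModes:
    - ReadWriteMany         # NFS volumes can be mounted by several pods at once
  storageClassName: nfs-client
  resources:
    requests:
      storage: 5Gi          # example size
```

A pod then mounts the volume by referencing the claim name under `spec.volumes[].persistentVolumeClaim.claimName`.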

After you set up any service that requires a custom port for access, you need to tell our technical support which NodePort it listens on, so that we can configure the load balancers.
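A minimal sketch of a Service exposed on a fixed NodePort is shown below. The service name, the selector label and the port numbers are hypothetical; the only constraint assumed here is the default Kubernetes NodePort range of 30000-32767, which may have been changed in a given cluster.

```yaml
# Illustrative NodePort Service; names and ports are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: NodePort
  selector:
    app: my-app             # must match the labels on the application's pods
  ports:
    - name: custom
      protocol: TCP
      port: 9000            # port of the Service inside the cluster
      targetPort: 9000      # port the container listens on
      nodePort: 30900       # port opened on every node; report this one to support
```

Setting `nodePort` explicitly keeps the value stable across redeployments, so the load balancer configuration does not need to change when the Service is recreated.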

A Kubernetes cluster is not suitable for running database servers, mainly because databases need persistent storage and, in the case of NFS, you have to take extra latency into account. For this purpose, we provide managed database servers that are optimized for maximum performance. The advantages of the managed solution include help with debugging (e.g. detecting slow queries that impact application performance), automatic backups, monitoring, and resolution of service outages and other problems.

Division of responsibilities

VSHosting

- HW and OS management of all Kubernetes nodes and load balancers

- Initial installation and basic configuration of Kubernetes and load balancers

- OS upgrades

- Kubernetes upgrades - with the customer’s approval

- Adding nodes to the cluster

- Management of assigned public addresses and balanced ports on load balancers

- Kubernetes platform monitoring - HW, load balancers, Kubernetes API, storage

- Kubernetes cluster and load balancer backups

Customer

- Creating application Docker images

- Application deployment, including CI configuration

- Complete management of applications and services running in the Kubernetes cluster

- Configuring access to persistent storage in applications

- Backups of persistent storage application data

- Monitoring of applications running in the Kubernetes cluster

- Debugging of containers and services

- Setting NodePorts for applications that require external access, and contacting support to set up port forwarding